Using Bidirectional Path Tracing with Irradiance Caching in EDXRay

I recently implemented irradiance caching in EDXRay, the renderer I have been developing independently. This lets the renderer synthesize noise-free images in a short amount of time.

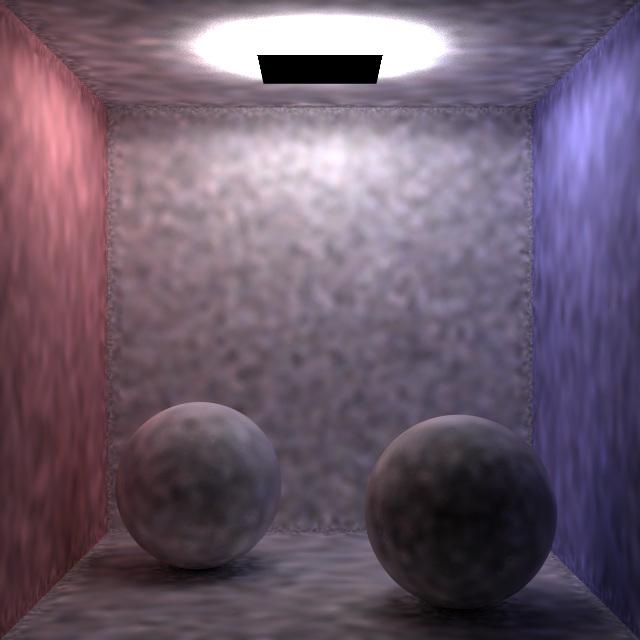

The error function follows this work. Irradiance samples are distributed evenly in screen space. My implementation lets me flexibly choose the integrator used to calculate irradiance. Currently it supports both of the GI integrators originally in EDXRay: the path tracer and the bidirectional path tracer. For scenes where lights can easily be reached by sampling paths from the camera, path tracing suffices. For scenes such as the one below, where the majority of the lighting comes from indirect illumination, bidirectional path tracing produces images with far fewer artifacts. All the images shown in this post were rendered with irradiance caching, with the sample distribution clamped at 1.5x to 10x pixel area and an irradiance evaluation sample count of 4096.
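To make the idea of a cache with a swappable integrator concrete, here is a minimal sketch in Python rather than EDXRay's actual code. It makes several assumptions: the weight is the classic Ward et al. irradiance caching weight, used as a stand-in for the error function referenced above; the clamp is interpreted as limiting each record's validity radius to between 1.5 and 10 times the projected pixel size; and names such as `IrradianceCache`, `estimate_irradiance` and `pixel_footprint` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def distance(a: Vec3, b: Vec3) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


@dataclass
class CacheRecord:
    position: Vec3
    normal: Vec3
    irradiance: float  # single channel for brevity
    radius: float      # validity radius, already clamped


def clamp_radius(raw_radius: float, pixel_footprint: float,
                 min_scale: float = 1.5, max_scale: float = 10.0) -> float:
    # Assumed reading of "clamped at 1.5x to 10x pixel area".
    return max(min_scale * pixel_footprint,
               min(raw_radius, max_scale * pixel_footprint))


def ward_weight(rec: CacheRecord, p: Vec3, n: Vec3) -> float:
    # Classic Ward-style weight: large when p is close to the record
    # and the two normals agree.
    normal_term = math.sqrt(max(0.0, 1.0 - dot(n, rec.normal)))
    denom = distance(p, rec.position) / rec.radius + normal_term
    return math.inf if denom == 0.0 else 1.0 / denom


class IrradianceCache:
    def __init__(self,
                 estimate_irradiance: Callable[[Vec3, Vec3], Tuple[float, float]],
                 max_error: float = 0.2):
        # estimate_irradiance is the pluggable integrator (path tracer or
        # BDPT in the post). It is assumed to return
        # (irradiance, mean distance to surrounding geometry).
        self.estimate_irradiance = estimate_irradiance
        self.max_error = max_error
        self.records: List[CacheRecord] = []

    def lookup(self, p: Vec3, n: Vec3, pixel_footprint: float) -> float:
        total_w = 0.0
        total_e = 0.0
        for rec in self.records:
            w = ward_weight(rec, p, n)
            if math.isinf(w):
                return rec.irradiance  # exactly on top of a record
            if w > 1.0 / self.max_error:
                total_w += w
                total_e += w * rec.irradiance
        if total_w > 0.0:
            return total_e / total_w  # interpolate from nearby records
        # Cache miss: run the expensive integrator and store a new record.
        e, mean_dist = self.estimate_irradiance(p, n)
        radius = clamp_radius(mean_dist, pixel_footprint)
        self.records.append(CacheRecord(p, n, e, radius))
        return e
```

Because the cache only sees a callable, switching between a path tracer and BDPT for the irradiance estimate is a matter of passing a different function. A linear scan over records is used here for brevity; a real implementation would store them in a spatial structure such as an octree.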

Additionally, bidirectional path tracing (BDPT) with multiple importance sampling is much better at rendering caustics than unidirectional path tracing. In scenes that contain specular objects, using BDPT to evaluate irradiance is therefore also much more efficient than path tracing, as shown below.

I am quite happy with the result. I personally haven’t seen this rendering approach (evaluating irradiance with BDPT in an irradiance cache) used in other renderers such as mitsuba or luxray, probably because caustics can be handled even better by photon mapping. The image below was rendered with irradiance caching. Even though bidirectional path tracing was used to calculate irradiance, the caustic area below the glass ball still has blotchy artifacts. This is because caustics are a special form of indirect light that changes quickly over the surface, which makes them less suitable for interpolation.

When BDPT is used with irradiance caching, it’s essential that it includes all of its path sampling techniques. Just as explicitly connecting light vertices to the camera lens is important for rendering caustics in normal path tracing (because caustics can be sampled much more easily by light tracing), connecting light vertices to the irradiance sample location is also important for making the algorithm work efficiently.
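As a rough illustration of that connection step, the sketch below computes the unweighted contribution to irradiance at a sample point `x` from a single light subpath vertex `y`. It is not EDXRay's code. It assumes a single color channel, that `alpha_light` is the accumulated light subpath throughput divided by its sampling density, that `bsdf_at_y` is the BSDF value at `y` for scattering toward `x`, and that visibility is tested by the caller. The MIS weight is omitted, and all names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def connect_to_irradiance_sample(x: Vec3, n_x: Vec3,
                                 y: Vec3, n_y: Vec3,
                                 alpha_light: float,
                                 bsdf_at_y: float,
                                 visible: bool = True) -> float:
    """Unweighted irradiance contribution at x from light subpath vertex y."""
    if not visible:
        return 0.0
    d = sub(y, x)
    dist2 = dot(d, d)
    if dist2 == 0.0:
        return 0.0
    inv_len = 1.0 / math.sqrt(dist2)
    w = (d[0] * inv_len, d[1] * inv_len, d[2] * inv_len)  # direction x -> y
    cos_x = dot(n_x, w)    # cosine at the irradiance sample
    cos_y = -dot(n_y, w)   # cosine at the light subpath vertex
    if cos_x <= 0.0 or cos_y <= 0.0:
        return 0.0
    # Geometry term; the cosine at x is what turns incident radiance
    # into irradiance, so no BSDF is evaluated at x.
    g = cos_x * cos_y / dist2
    return alpha_light * bsdf_at_y * g
```

The irradiance sample plays the role the camera lens plays in light tracing: it is a fixed endpoint that every light subpath vertex can be connected to, which is what lets caustic paths contribute to the cached value.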

The next step is to use gradient information of the irradiance to do more accurate interpolation. I am also interested in implementing this work. They seem to have been able to develop a much more efficient error metric.
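For reference, gradient-based interpolation is commonly written in the form introduced by Ward and Heckbert. This is the textbook formulation, not necessarily the one I will end up implementing. Here $E_i$, $p_i$ and $n_i$ are the irradiance, position and normal of cache record $i$, $w_i$ is its interpolation weight at the shading point, and $\nabla_r E_i$ and $\nabla_t E_i$ are its rotational and translational gradients:

$$
E(p, n) = \frac{\sum_i w_i(p, n)\,\bigl[\,E_i + (n_i \times n)\cdot\nabla_r E_i + (p - p_i)\cdot\nabla_t E_i\,\bigr]}{\sum_i w_i(p, n)}
$$

Each record then contributes a first-order extrapolation of its irradiance instead of a constant value, which is what reduces visible discontinuities between neighboring records.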

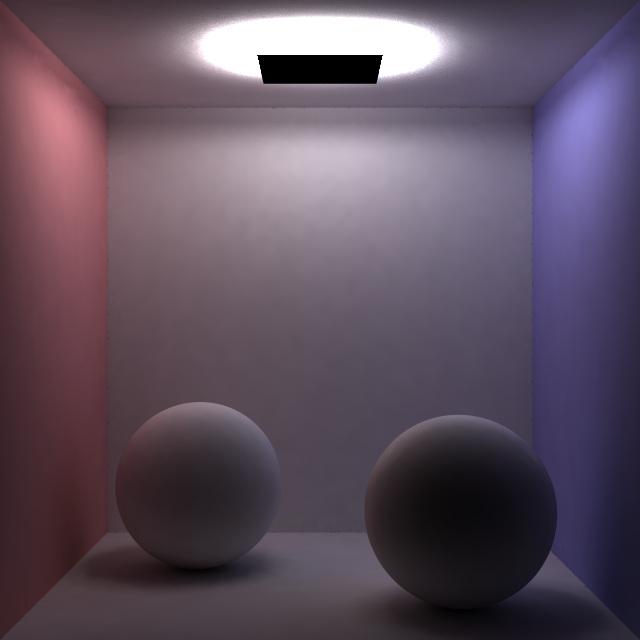

Lastly, here is one more image rendered with my irradiance cache integrator, again using 4096 bidirectional path samples for each irradiance evaluation. Just for fun, I also did another rendering that visualizes only the indirect lighting in the same scene.
